Photo by Andrew Neel on Unsplash

Huffman Coding Algorithm For Image Data Compression

by Paritosh Tripathi

Table of Contents

- Introduction

- Example

- Algorithm

- Explanation

- Implementation

- Takeaway: Huffman Coding is a technique to encode data to reduce its size without losing information.

Introduction

In this article, we’ll discuss the concept of Huffman Coding.

Huffman Coding is a technique of compressing data to reduce its size without losing any of the details. It was first developed by David Huffman.

It is an entropy-based algorithm that relies on an analysis of the frequency of symbols in an array. Based on this, it constructs a variable-length code table for encoding a source symbol (such as a character in a file).

Huffman Coding uses a specific method for choosing the representation for each symbol, resulting in a prefix code (sometimes called “prefix-free codes”, that is, the bit string representing some particular symbol is never a prefix of the bit string representing any other symbol) that expresses the most common characters using shorter strings of bits than are used for less common source symbols.

Huffman Coding is generally useful to compress the data in which there are frequently occurring characters. It can be used to compress all sorts of data including text, audio and images.

Example

Let’s take an example to understand how Huffman coding works:

Suppose we want to compress a file that contains the following characters and their frequencies:

a – 5

b – 9

c – 12

d – 13

e – 16

f – 45

If we use Fixed Length Code, it will be of length 8 (for each character) as there are a total of 256 ASCII characters. So the total size of the file would be 8*(5+9+12+13+16+45) = 780 bits.

Algorithm

The Huffman algorithm works by identifying symbols that are used more frequently and assigning shorter codewords to those symbols. This allows it to represent the most frequent characters with the fewest bits, which results in a compressed file.

The algorithm begins by calculating the frequency of all characters in the input stream (file). The next step is to construct a binary tree from these frequencies. The tree is traversed to create the codeword for each character: 0 represents going left and 1 represents going right. The rightmost leaf node corresponds to an end-of-data marker, while all other leaf nodes correspond to input characters or symbols.

This is the Algorithm for the Hoffman, a detailed explanation and Implementation will be after this.

create a priority queue Q consisting of each unique character.

sort then in ascending order of their frequencies.

for all the unique characters:

create a newNode

extract minimum value from Q and assign it to leftChild of newNode

extract minimum value from Q and assign it to rightChild of newNode

calculate the sum of these two minimum values and assign it to the value of newNode

insert this newNode into the tree

return rootNode

Explanation

Huffman coding is done with the help of the following steps.

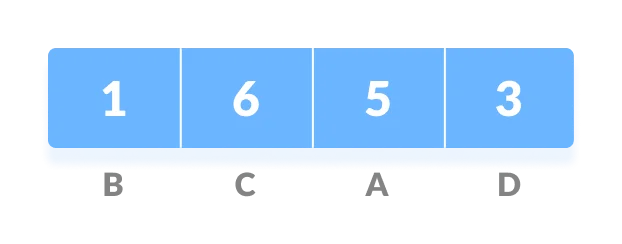

Step - 1 Calculate the frequency of each character in the string.

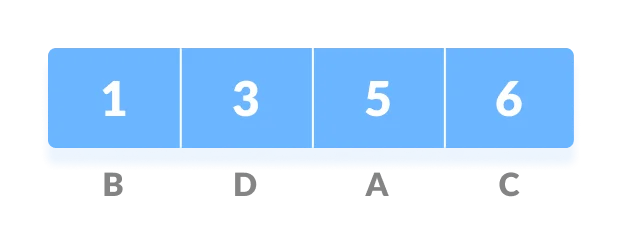

Step - 2 Sort the characters in increasing order of the frequency. These are stored in a priority queue Q.

Step - 3 Make each unique character as a leaf node.

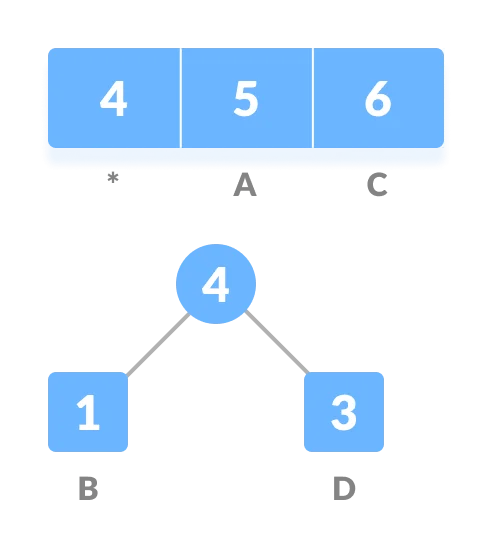

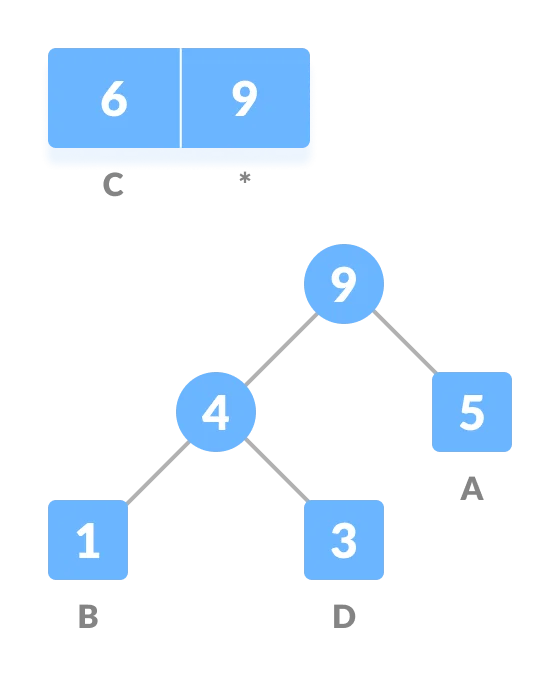

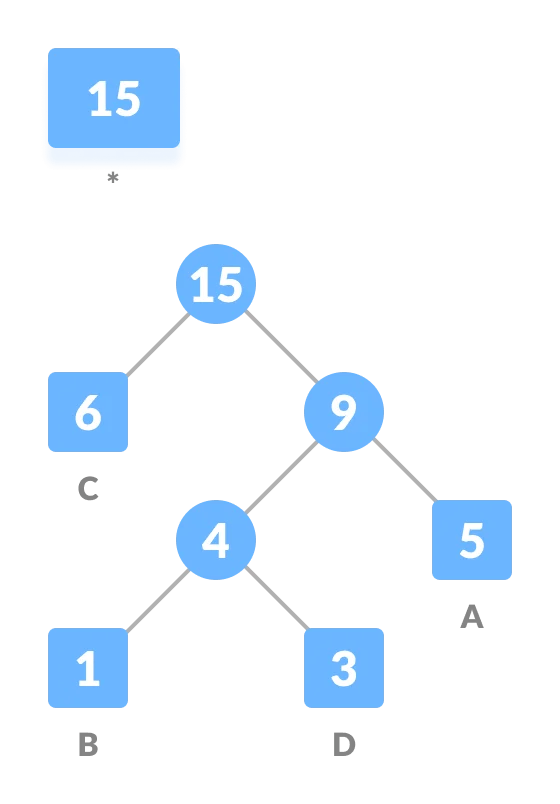

Step - 4 Create an empty node z. Assign the minimum frequency to the left child of z and assign the second minimum frequency to the right child of z. Set the value of the z as the sum of the above two minimum frequencies.

Step - 5 Remove these two minimum frequencies from Q and add the sum to the list of frequencies (* denote the internal nodes in the figure above).

Step - 6 Insert node z into the tree.

Step - 7 Repeat steps 3 to 5 for all the characters

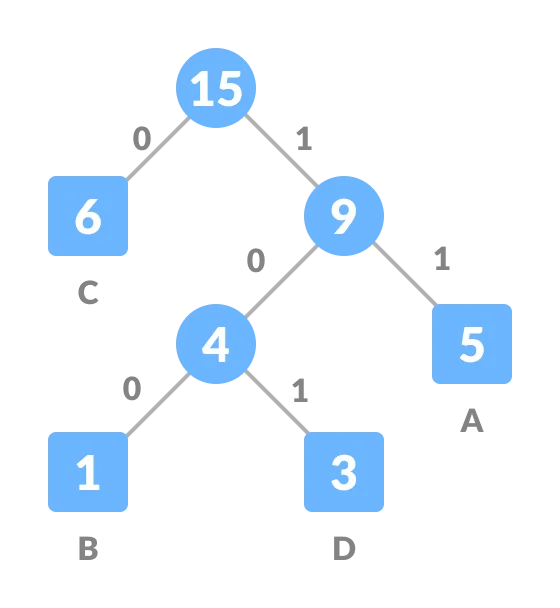

Step - 8 For each non-leaf node, assign 0 to the left edge and 1 to the right edge

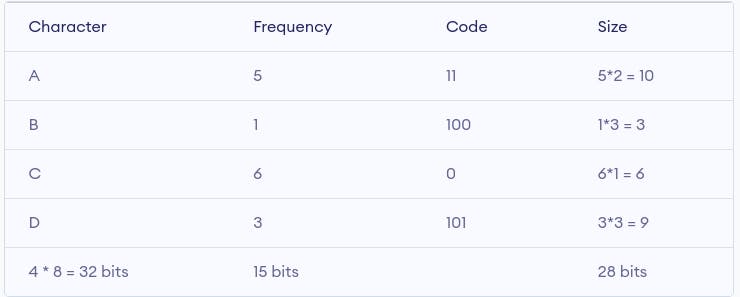

For sending the above string over a network, we have to send the tree as well as the above-compressed code. The total size is given in the table below.

Without encoding, the total size of the string was 120 bits. After encoding the size is reduced to 32 + 15 + 28 = 75.

Decoding the code

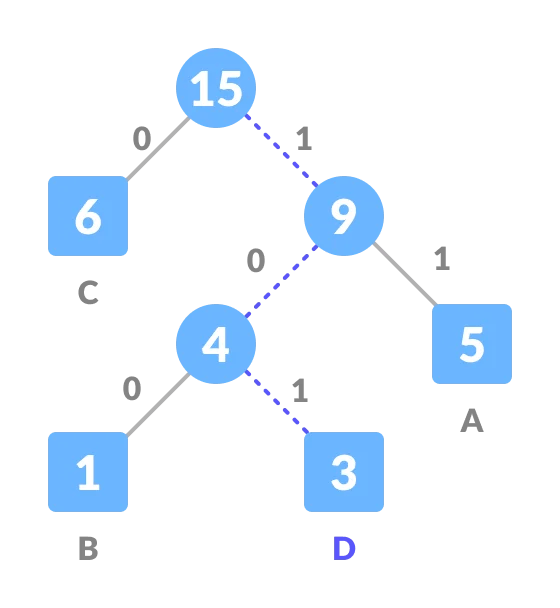

For decoding the code, we can take the code and traverse through the tree to find the character.

Let 101 is to be decoded, we can traverse from the root as in the figure below.

Implementation in Python

# Huffman Coding in python

string = 'BCAADDDCCACACAC'

# Creating tree nodes

class NodeTree(object):

def __init__(self, left=None, right=None):

self.left = left

self.right = right

def children(self):

return (self.left, self.right)

def nodes(self):

return (self.left, self.right)

def __str__(self):

return '%s_%s' % (self.left, self.right)

# Main function implementing huffman coding

def huffman_code_tree(node, left=True, binString=''):

if type(node) is str:

return {node: binString}

(l, r) = node.children()

d = dict()

d.update(huffman_code_tree(l, True, binString + '0'))

d.update(huffman_code_tree(r, False, binString + '1'))

return d

# Calculating frequency

freq = {}

for c in string:

if c in freq:

freq[c] += 1

else:

freq[c] = 1

freq = sorted(freq.items(), key=lambda x: x[1], reverse=True)

nodes = freq

while len(nodes) > 1:

(key1, c1) = nodes[-1]

(key2, c2) = nodes[-2]

nodes = nodes[:-2]

node = NodeTree(key1, key2)

nodes.append((node, c1 + c2))

nodes = sorted(nodes, key=lambda x: x[1], reverse=True)

huffmanCode = huffman_code_tree(nodes[0][0])

print(' Char | Huffman code ')

print('----------------------')

for (char, frequency) in freq:

print(' %-4r |%12s' % (char, huffmanCode[char]))

Takeaway

It is observed that, for a given sequence of source symbols, the Huffman compression algorithm produces code words that can be quickly decoded back into approximate values of the original source symbols. In addition, each codeword is produced using (relatively) few bits, which means more efficient and compact data. In practice, Huffman coding is much better than the alternative Lempel-Ziv coding schemes, especially if the data set being compressed consists of different values with very high-frequency and low-frequency components.